Ethical Intelligence for Housing Stability

A doctoral research initiative exploring how secure, offline analytics can support ethical decision-making in homelessness services.

This project develops a constrained decision support framework that applies advanced analytical methods while preserving client privacy, professional judgment, and institutional accountability.

Purpose and Practice

The Mission

This initiative exists to bridge the gap between advanced artificial intelligence and strict data sovereignty. It brings social work practice and data science together to strengthen professional judgment, ensuring that ethical privacy standards are never compromised for the sake of innovation.

Our Approach

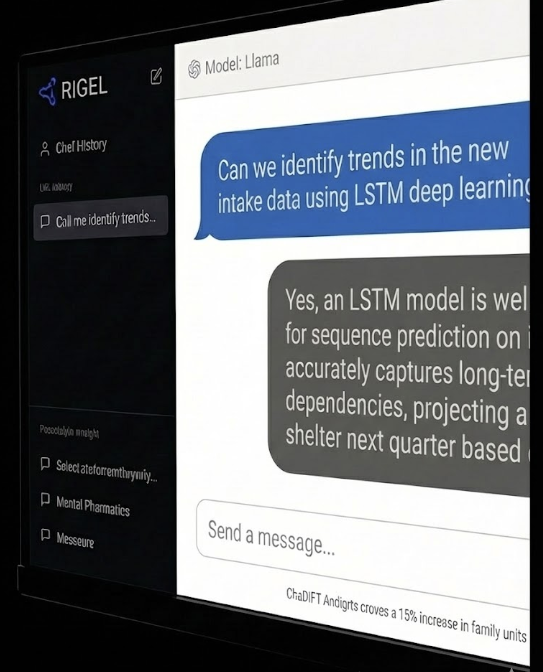

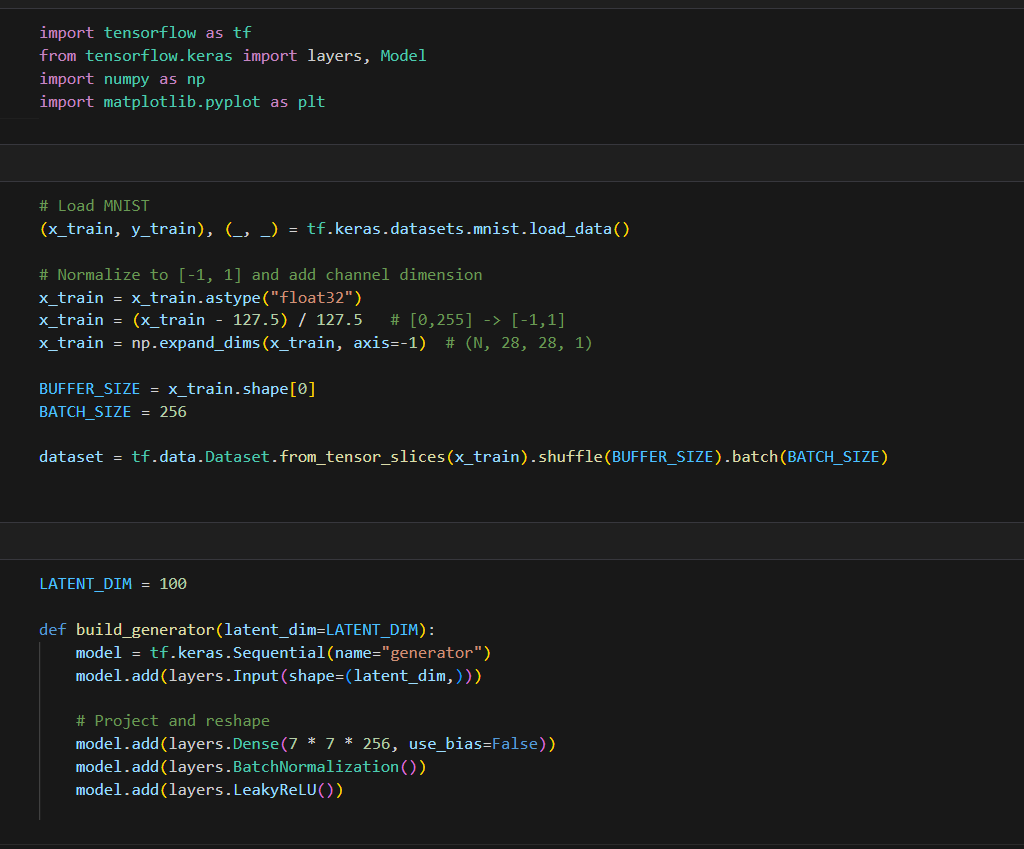

Rather than relying on risky cloud-based APIs, this approach focuses on designing secure, offline decision support systems. By hosting Large Language Models (LLMs) and deep learning algorithms entirely on local, on-premise infrastructure, the framework allows practitioners to query complex client data in natural language without exposing a single byte of sensitive PII to the public internet.

The Impact

By grounding advanced analytics in the ethical foundations of practice, the framework goes beyond surface patterns of housing instability. The goal is to equip frontline social work professionals with a conversational analyst that respects client dignity, preserves contextual understanding, ensures data privacy, and makes high-level data science accessible to the humans responsible for accountability.

Designing Ethical Decision Support

Preserving Context in Social Service Data

The Challenge: Housing instability cannot be understood through isolated data points alone. Traditional analytic tools often require technical expertise that limits who can meaningfully engage with the data, leading to a loss of narrative context.

Our Solution: We utilize Natural Language Processing (NLP) to preserve the richness of case narratives while making analytical insight accessible. By allowing practitioners to interact with information using familiar professional language, the system supports ethical analysis without stripping away meaning or context.

Secure Offline Analysis for Sensitive Data

The Challenge: Social service agencies work with highly sensitive information that must remain protected and locally governed. Many existing tools require external cloud platforms or specialized workflows that exclude frontline staff and introduce privacy risks.

Our Solution: Our framework integrates locally deployed models within self-contained, offline environments. This allows social workers to explore patterns and query their datasets without ever exporting information to third-party servers or relying on external technical intermediaries.

Supporting Inclusive Decision Making

The Challenge: Ethical decision-making depends on who is able to participate in the process. When analytic systems are accessible only to data specialists, the nuanced insight of the frontline social worker can be unintentionally sidelined.

Our Solution: By using natural language-based interfaces grounded in social work practice, we support inclusive participation across all roles. We use locally deployed language models to amplify professional judgment while ensuring that accountability remains human and shared.

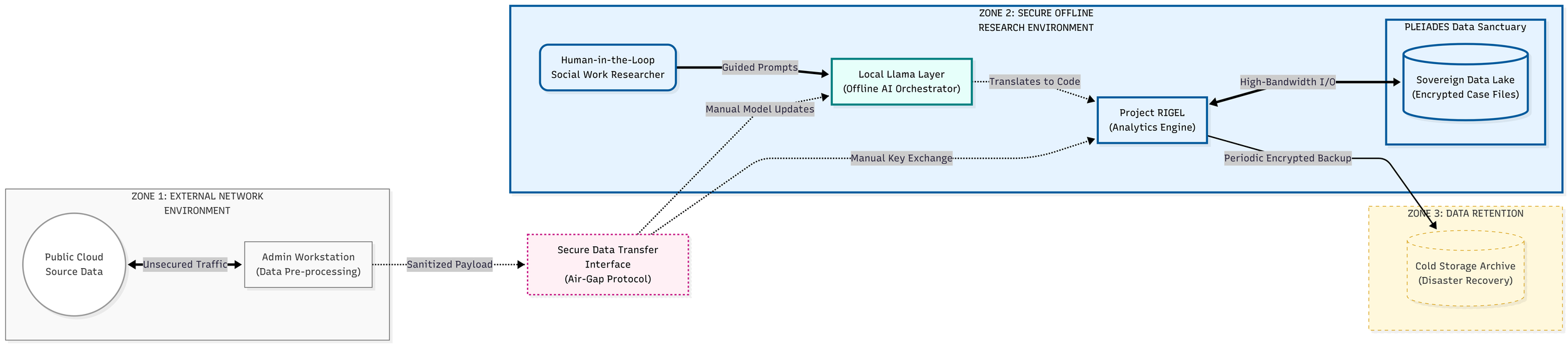

Secure Offline Analytics Architecture

This architecture illustrates how ethical and secure analytics can be operationalized within homelessness services without reliance on external cloud platforms. The framework separates internet-facing systems from a protected, air-gapped analytical environment, ensuring that sensitive casework data remains under agency control at all times.

Within this controlled environment, high-performance computing resources support advanced analytical methods while preserving data integrity, auditability, and professional accountability. Human oversight remains central to the process, with social workers directly interacting with analytical outputs rather than automated decision systems. Periodic encrypted archival processes provide resilience and continuity without compromising confidentiality.

Figure 1: The Secure Data Lifecycle

How It Works: This architecture enforces a strict physical separation between public networks and sensitive client data. Through architectural containment and offline control, it ensures privacy, governance, and accountability throughout the analytical process.

Zone 1: Intake & Preparation (Grey): Data from multiple sources is gathered and prepared within a standard office environment for secure transfer. This stage supports validation, formatting, and integrity checks prior to ingestion, without performing analytical processing on sensitive case information.

The Air Gap Protocol (Pink): Prepared datasets are transferred physically using a secure hardware interface into the protected research environment. This air-gapped process ensures there is never a digital connection between external networks and the analytical systems where sensitive data is used.

Zone 2: Sovereign Analytics (Blue): Within the protected sanctuary, the full dataset, including sensitive case information, is analyzed using locally deployed analytical and language-based tools. High-performance analysis occurs entirely offline, ensuring that comprehensive insights are generated while data remains under agency control.

Zone 3: Resilient Archive (Yellow): Encrypted backups of analytical data are periodically transferred to an offline cold storage archive for long-term retention and disaster recovery, preserving completeness without introducing external connectivity.
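The integrity checks mentioned for Zone 1 can be illustrated with a checksum manifest: one is built before the physical transfer and re-verified inside the protected environment. The sketch below is a minimal illustration using only the Python standard library; the function names and the manifest layout are assumptions made for this example, not part of the Project Rigel specification.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large exports never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(folder: Path) -> dict[str, str]:
    """Zone 1 (hypothetical step): record a checksum for every prepared file."""
    return {
        p.relative_to(folder).as_posix(): sha256_of(p)
        for p in sorted(folder.rglob("*"))
        if p.is_file()
    }


def verify_manifest(folder: Path, manifest: dict[str, str]) -> list[str]:
    """Zone 2 (hypothetical step): list files that are missing or altered after transfer."""
    problems = []
    for name, expected in manifest.items():
        candidate = folder / name
        if not candidate.is_file() or sha256_of(candidate) != expected:
            problems.append(name)
    return problems
```

In this sketch an empty list from `verify_manifest` means every file arrived unchanged. Any other result would be a reason to stop ingestion and repeat the transfer.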

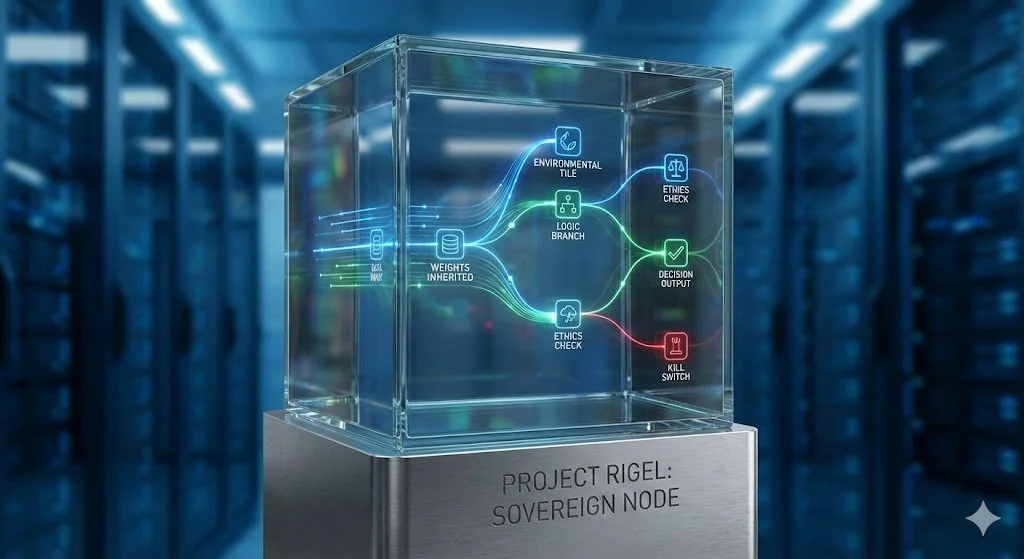

The Glass Box Architecture

Transparency as a Clinical Obligation

In homelessness services, a Black Box AI system is not a viable option. When analytical outputs influence housing decisions involving Personally Identifiable Information (PII), the licensed professional responsible for that client has both an ethical and legal obligation to understand why. Regulatory frameworks including HIPAA, CCPA/CPRA, and HUD HMIS standards do not permit consequential determinations about individuals to be made without a traceable, auditable logic path. Project Rigel is built on a Glass Box architecture that treats transparency as a foundational clinical standard.

Every output includes a readable logic path. When the system identifies a client as high-risk, the caseworker sees the exact drivers behind that score, such as service gaps, trajectory volatility, or demographic intersectionality. Every risk score is traceable to a tangible, auditable trigger.

This architecture maps directly to HIPAA audit trail requirements and CCPA client rights obligations, including the right of a client to understand how their data informed a determination. Project Rigel functions as a high-fidelity analytical consultant while the licensed professional, the human-in-the-loop, retains final clinical authority through a built-in manual override at every decision point.
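One way to picture a Glass Box output is as a record that carries its own drivers and its own override history. The sketch below is illustrative only: the class names, field names, and the idea of a per-driver "contribution" value are assumptions introduced for this example and do not describe Project Rigel's actual data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Driver:
    name: str            # e.g. "service_gap" (hypothetical label)
    evidence: str        # the auditable trigger shown to the caseworker
    contribution: float  # assumed share of the score attributed to this driver


@dataclass
class RiskAssessment:
    client_ref: str
    model_score: float
    drivers: list[Driver]
    override_score: Optional[float] = None
    audit_log: list[str] = field(default_factory=list)

    def logic_path(self) -> list[str]:
        """Readable explanation, strongest driver first."""
        ranked = sorted(self.drivers, key=lambda d: d.contribution, reverse=True)
        return [f"{d.name}: {d.evidence} ({d.contribution:+.2f})" for d in ranked]

    def override(self, reviewer: str, score: float, reason: str) -> None:
        """Manual override: the professional's score replaces the model's, with a logged reason."""
        if not reason.strip():
            raise ValueError("An override must include a written reason.")
        self.override_score = score
        self.audit_log.append(
            f"{reviewer} set score {score} (model suggested {self.model_score}): {reason}"
        )

    @property
    def final_score(self) -> float:
        """The human decision, when present, always takes precedence."""
        return self.model_score if self.override_score is None else self.override_score
```

The design choice worth noting is that the model score is never overwritten. Both values stay on the record, so an auditor can see what the system suggested and what the professional decided.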

User Experience (UX) Tiles

A Workflow-First Interface for Social Work Practice

Social workers are trained in practice, not in technology. Requiring a licensed professional to formulate precise AI prompts creates a barrier to inclusivity that limits who can meaningfully engage with Project Rigel.

The UX Tiles interface removes that barrier entirely. Each tile is a purpose-designed workflow module built around a specific social work task and tuned to LAHSA and participating CoC agency datasets. A caseworker selects the tile that matches their current need, such as Housing Stability Scoring, Resource Utilization Mapping, or Trajectory Risk Review, and the system handles the rest.

Because each tile operates within a defined analytical scope, outputs are consistent, comparable, and uniform across cases, caseworkers, and agencies. That standardization supports equitable practice, strengthens audit readiness, and creates a foundation for cross-agency research.

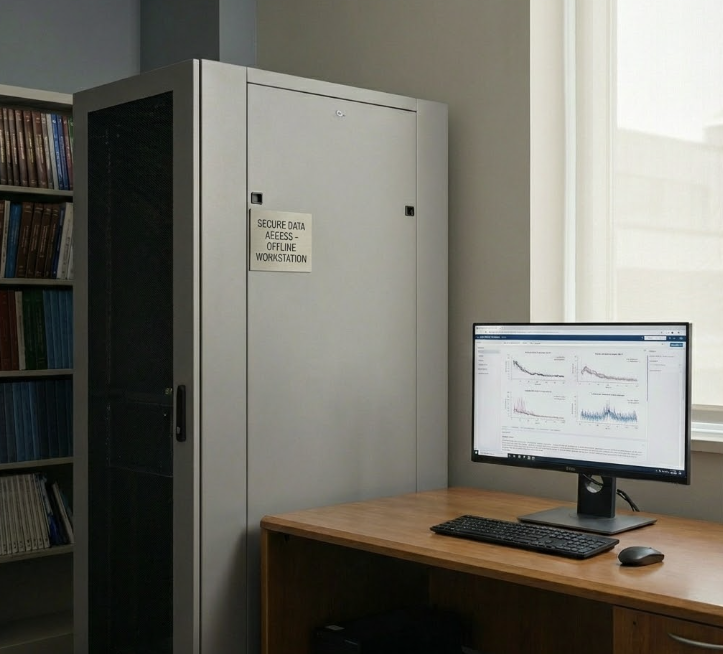

Secure Offline Computing for Ethical Research

Frontline social workers currently face a technological dilemma. They need the predictive power of modern AI to navigate complex client histories, but strict privacy laws prohibit uploading sensitive case files to public cloud servers. Standard office workstations simply lack the hardware power to run these advanced models locally.

This initiative seeks to resolve that tension by proposing the deployment of a dedicated, high-performance offline infrastructure. By bringing the computation inside the secure perimeter, the project aims to empower practitioners to utilize Deep Learning and Natural Language tools safely. If funded, this system is designed to provide the necessary architecture to give social workers access to advanced decision support tools without ever exposing client data to external risks.

System Capabilities

Local Generative AI: On-premise language model orchestration without internet dependency.

High-Performance Compute: Dual-GPU acceleration for deep learning and temporal analysis.

Encrypted Offline Storage: 80TB encrypted offline data lake for longitudinal datasets.

Zero-Trust Architecture: Physical air-gap isolation with hardware-enforced security protocols.

These capabilities are intentionally integrated to support ethical analysis without external connectivity or delegated decision-making.

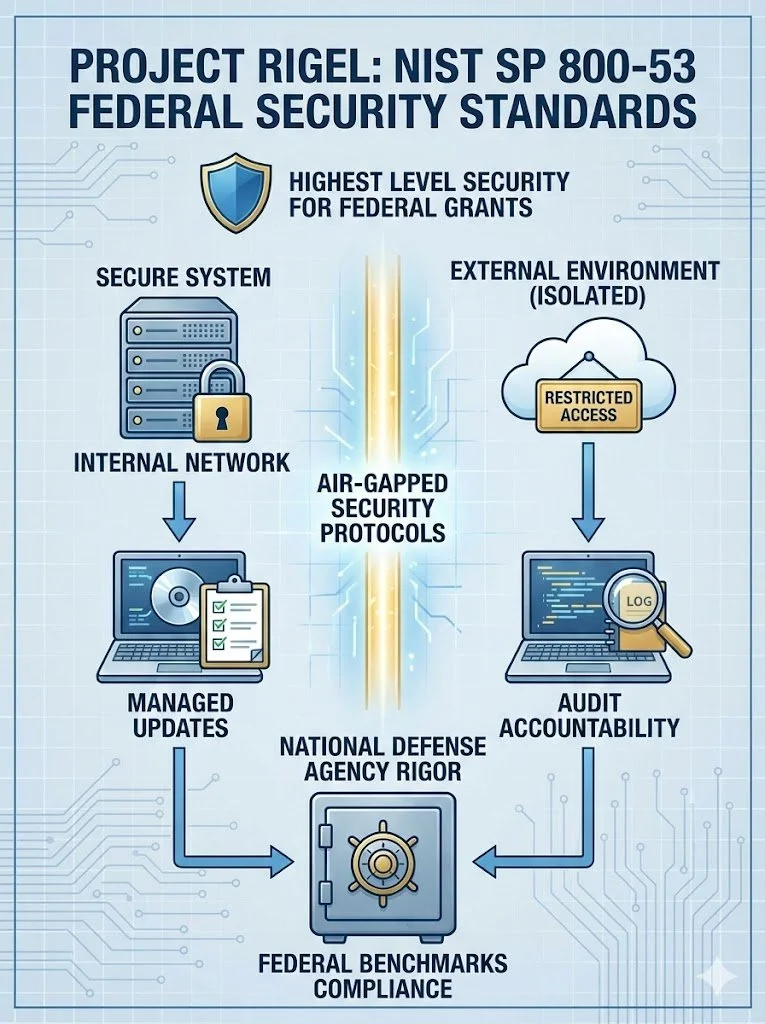

Compliance Framework for Project Rigel

While Project Rigel is technically air-gapped to maximize data sovereignty, we treat compliance as a multi-layered framework. We are designing for HIPAA and SOC 2 for security, but more importantly, we are aligning with California’s CCPA/CPRA to automate the 'Right to be Forgotten.' Furthermore, our architecture follows NIST 800-53 protocols for secure manual updates to ensure we meet federal grant standards for social service data.

HIPAA & Data Privacy

Project Rigel will be designed to handle the sensitive intersection of social work and healthcare data. By adhering to HIPAA standards, the system will implement rigorous technical safeguards.

End-to-end encryption and strict access controls will protect any Protected Health Information (PHI) utilized within our predictive models.

This will allow clinical data to be leveraged for better outcomes without risking patient confidentiality.

California Privacy Rights (CCPA/CPRA)

As a California-based initiative, Project Rigel will prioritize the CCPA/CPRA.

Our "Right to be Forgotten" framework will function as a core technical commitment.

We will provide verifiable processes that will allow individuals to request the deletion of their data from our sovereign nodes, ensuring that "Digital Dignity" is maintained throughout the entire data lifecycle.

NIST SP 800-53 (Federal Security Standards)

For the highest level of security required by federal grants, we will utilize the NIST 800-53 framework.

This will provide the roadmap for our proposed air-gapped security protocols, specifically governing how the system will manage updates and audit accountability.

By following these federal benchmarks, we will ensure that Project Rigel meets the same security rigor used by national defense agencies.

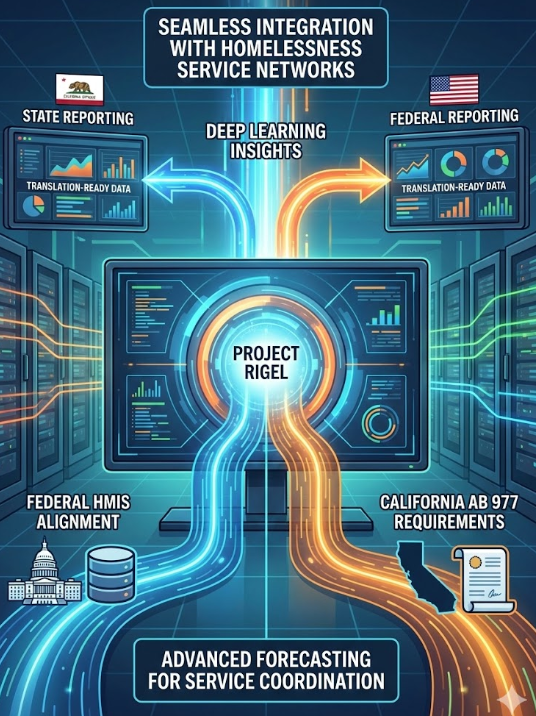

Federal HMIS Alignment (AB 977)

To ensure seamless integration with existing homelessness service networks, Project Rigel will align with HMIS data standards and California’s AB 977 requirements.

This alignment will ensure that our deep learning insights remain "translation-ready" for state and federal reporting, allowing our advanced forecasting to support existing service coordination efforts.

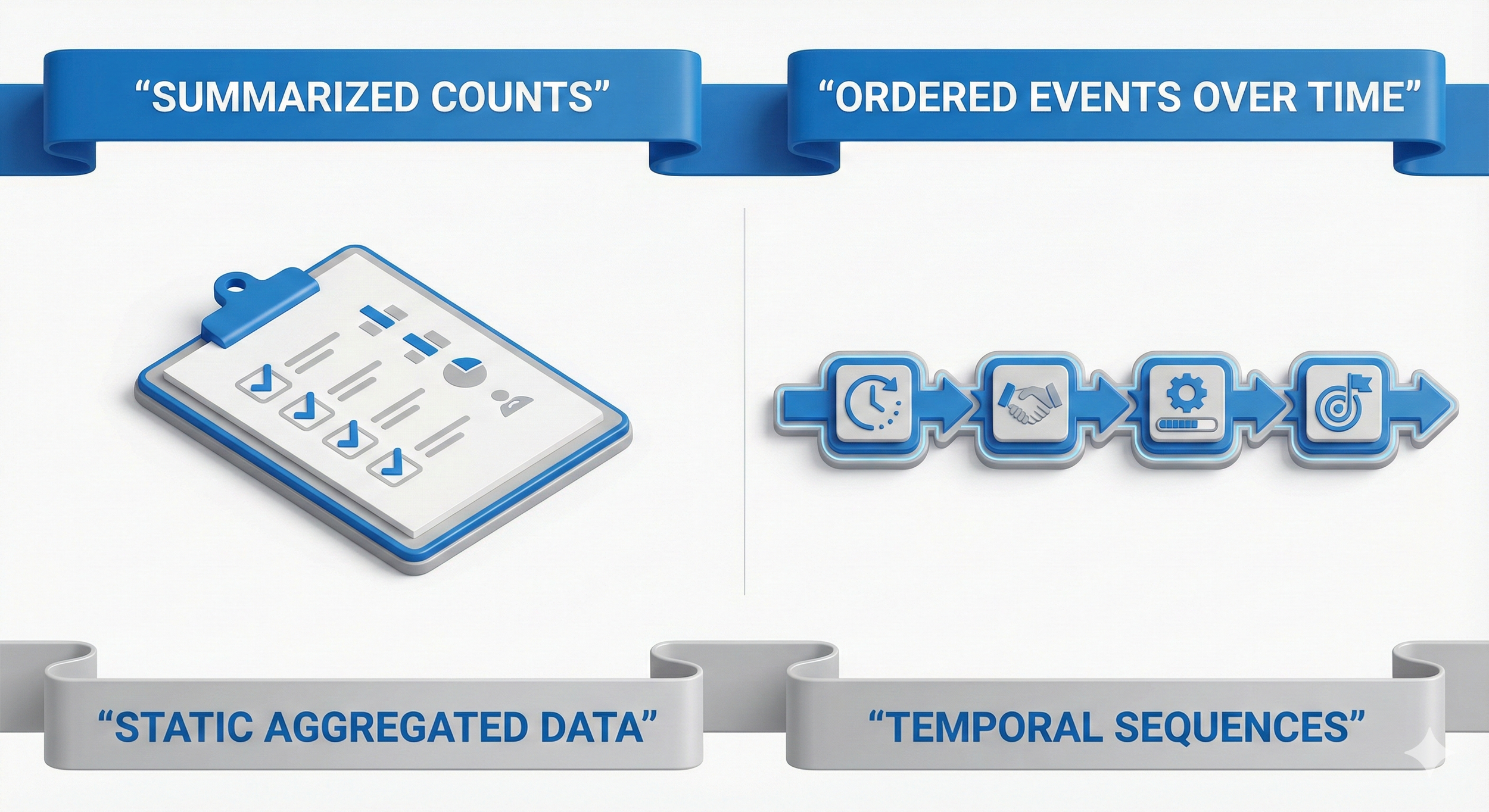

Divergent Data Representations

By holding population and geography constant, we rigorously test whether sequence-based AI provides additional predictive value over traditional regression in predicting exits to permanent housing.

Both representations describe the same clients and outcomes. The difference lies in whether information is summarized into totals or preserved as ordered events over time.
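The difference between the two representations can be seen in a few lines. The sketch below uses two invented clients whose service histories contain the same events in a different order; the event names and helper functions are hypothetical and chosen only for illustration.

```python
from collections import Counter

# Two hypothetical clients: identical services, different order.
client_a = ["Shelter", "Clinic", "Shelter", "Housing"]  # ends in housing
client_b = ["Housing", "Shelter", "Clinic", "Shelter"]  # starts housed, ends in shelter


def as_totals(events: list[str]) -> dict[str, int]:
    """Aggregate view: how many times each service occurred."""
    return dict(Counter(events))


def as_sequence(events: list[str]) -> tuple[str, ...]:
    """Sequence view: the same events with their order preserved."""
    return tuple(events)


same_totals = as_totals(client_a) == as_totals(client_b)
same_sequence = as_sequence(client_a) == as_sequence(client_b)
```

Here `same_totals` is true and `same_sequence` is false: a model that sees only totals cannot tell these two clients apart, while a sequence-based model can.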

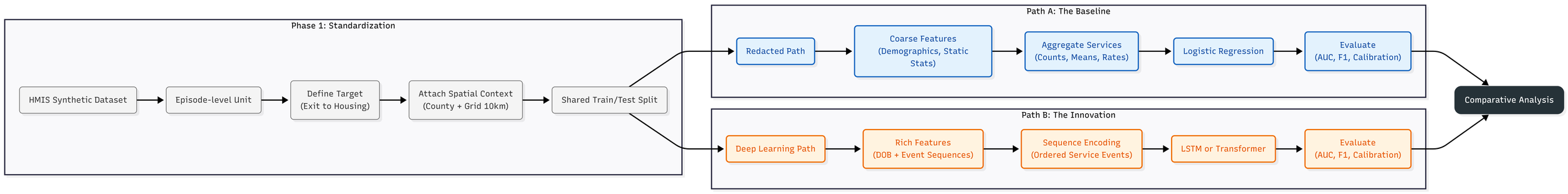

The Experimental Architecture

The following workflow visualizes the study’s rigorous "Dual-Pathway" design. To strictly isolate the value of temporal learning, the process begins with a shared initialization phase, ensuring that both models operate on identical cohorts and spatial baselines.

From this common foundation, the methodology bifurcates: the linear path adheres to traditional redaction and aggregation constraints, while the deep learning path utilizes secure, sequence-based encoding. Both pathways reconverge for a direct, metric-for-metric performance evaluation.

The Shared Foundation (Grey Nodes)

Standardization: Every experiment begins here. Both models are fed the exact same raw data and are tested against the same "Exit to Housing" definition. This ensures that any difference in results is due to the method, not the data.

This shared foundation ensures that any performance differences reflect representational and modeling choices rather than differences in data access, geography, or evaluation criteria.

Path A: The Baseline (Blue Pathway)

The Current Standard. This path represents how most agencies currently analyze data by compressing services into totals that remove timing and order.

Input: Redacted, aggregate counts (e.g., "Three Shelter Stays").

Method: Traditional Logistic Regression.

Goal: To see how much we can predict from a point-in-time (PIT) snapshot of summarized totals.

Path B: The Innovation (Orange Pathway)

The Deep Learning Approach.

This path utilizes the full richness of the data while maintaining privacy.

Input: Complete event sequences (e.g., “Shelter → Clinic → Housing”).

Method: LSTM / Transformer Neural Networks.

Goal: To capture the "story" of the client's journey that aggregate counts miss.
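Before an LSTM or Transformer can read an event sequence, the events are typically mapped to integer IDs and padded to a common length. The sketch below shows one common way to do this in plain Python. The vocabulary, the left-padding choice, and the function names are assumptions for illustration, not the study's confirmed preprocessing.

```python
PAD = 0  # ID reserved for padding so that real events start at 1


def build_vocab(sequences: list[list[str]]) -> dict[str, int]:
    """Assign each distinct event an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for seq in sequences:
        for event in seq:
            vocab.setdefault(event, len(vocab) + 1)
    return vocab


def encode(seq: list[str], vocab: dict[str, int], max_len: int) -> list[int]:
    """Keep the most recent max_len events and left-pad shorter histories."""
    if max_len < 1:
        raise ValueError("max_len must be at least 1")
    ids = [vocab[event] for event in seq][-max_len:]
    return [PAD] * (max_len - len(ids)) + ids


histories = [["Shelter", "Clinic", "Housing"], ["Shelter", "Shelter"]]
vocab = build_vocab(histories)
encoded = [encode(h, vocab, max_len=4) for h in histories]
```

Left-padding keeps the most recent event in the final position for every client, which is a common convention for recurrent models. Right-padding with masking is an equally valid alternative.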

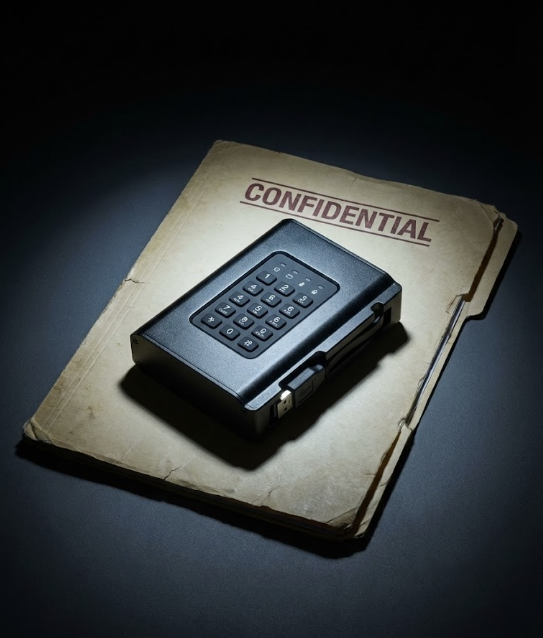

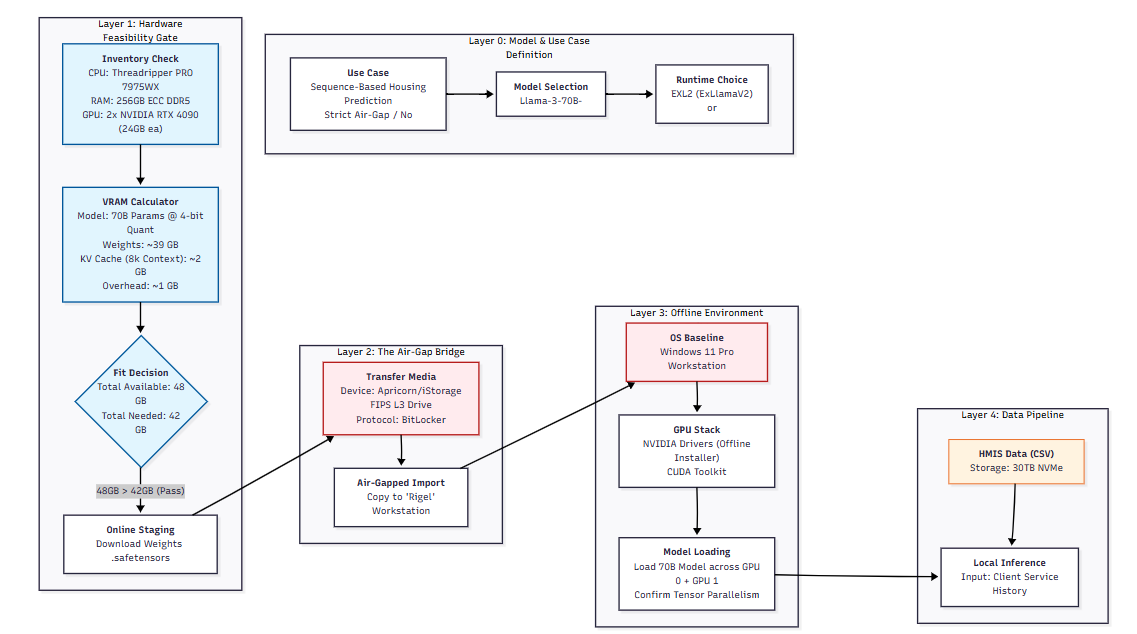

The Rigel Protocol: Layered Operational Security

This is the blueprint of the "Third Option", a strict operational protocol divided into four layers.

Layer 1 (The Gate): We calculate the exact VRAM requirements to ensure the model fits locally. If the hardware cannot support the required computations, deployment does not proceed.

Layer 2 (The Air-Gap): This is the critical security step. Data enters via hardware-encrypted media (Apricorn), ensuring no network connection to the outside world.

Layer 3 (The Clean Room): This is the Offline Environment itself. Here, we configure the Windows 11 workstation with networking physically disabled and load the local NVIDIA drivers and CUDA toolkit. It functions as a digitally isolated secure environment.

Layer 4 (The Inference): Finally, the HMIS data meets the model. The 'Local Inference' happens here, completely isolated from the internet.

The Performance Gap

Hardware Constraints for On-Premise AI

A central challenge in deploying ethical AI within social service agencies is the disparity between standard office hardware and the computational requirements of modern Deep Learning. While traditional administrative software runs efficiently on standard CPUs, Large Language Models (LLMs) and deep learning networks have specific hardware constraints that demand specialized high-performance Graphics Processing Units (GPUs).

The Memory Constraint (VRAM)

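Deep Learning models must be held in Video Memory (VRAM) for GPU inference.

The Requirement: To locally host a 70-billion parameter model (such as Llama-3-70B) for secure inference, the hardware requires approximately 40GB to 48GB of dedicated VRAM, assuming the weights are quantized to roughly 4 bits each. At full 16-bit precision, the weights alone would occupy about 140GB.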

The Gap: Standard agency computers typically utilize shared system memory with no dedicated VRAM. Attempting to load a model of this size on standard hardware would result in an immediate system failure (out of memory).

The Proposed Architecture: The Project Rigel design specifies Dual NVIDIA RTX 3090 GPUs to establish a combined 48GB VRAM pool. This specific configuration is required to physically accommodate the model's weight matrices within the local offline environment.
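The memory requirement can be approximated with simple arithmetic: parameter count times bits per weight, plus some headroom for the runtime. The sketch below is a rough estimate, not a sizing tool. The 20% overhead figure and the function names are assumptions chosen for illustration, and real usage varies with context length and inference software.

```python
def estimate_vram_gb(
    params_billion: float,
    bits_per_weight: float,
    overhead: float = 0.20,  # assumed headroom for cache and activations
) -> float:
    """Approximate VRAM in GB: weights (params * bits / 8) plus fractional overhead."""
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * (1 + overhead)


def passes_gate(required_gb: float, gpu_vram_gb: list[float]) -> bool:
    """Layer 1 style check: proceed only if the pooled VRAM covers the estimate.

    Pooling assumes the inference runtime can split the model across GPUs.
    """
    return required_gb <= sum(gpu_vram_gb)


four_bit = estimate_vram_gb(70, 4)     # 35 GB of weights plus overhead
sixteen_bit = estimate_vram_gb(70, 16)  # 140 GB of weights plus overhead
fits_dual_3090 = passes_gate(four_bit, [24, 24])
```

Under these assumptions a 4-bit 70B model comes to about 42 GB, inside the 40GB to 48GB range above, and passes the gate on two 24GB cards. The 16-bit version comes to about 168 GB and does not.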

Inference Latency (The Time Barrier)

The utility of an AI system depends on inference speed, which is the time it takes to generate a response.

CPU Limitation: Running complex models on a standard Central Processing Unit (CPU) forces sequential processing. Benchmarks suggest that generating a single complex response on a CPU can take minutes rather than seconds, which would make the tool impractical for real-time case management.

Proposed Rigel Acceleration: The proposed Dual RTX 3090 configuration is designed to be significantly faster. It is projected to achieve 20–25 words per second, a 5x to 8x speed increase over standard research tools. This ensures the system feels conversational and fluid, rather than slow and robotic.
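To make the projected throughput concrete, the arithmetic below converts words per second into waiting time for a single answer. The 150-word response length is an assumption chosen for illustration. Only the 20 to 25 words-per-second range comes from the projection above.

```python
def response_seconds(words: int, words_per_second: float) -> float:
    """Time to generate a response of a given length at a given throughput."""
    if words_per_second <= 0:
        raise ValueError("words_per_second must be positive")
    return words / words_per_second


ANSWER_WORDS = 150  # hypothetical length of one case summary

slowest = response_seconds(ANSWER_WORDS, 20)  # lower end of the projected range
fastest = response_seconds(ANSWER_WORDS, 25)  # upper end of the projected range
```

At the projected rates, a 150-word answer would take between 6 and 7.5 seconds to generate.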

The Privacy-Compute Trade-off

Agencies typically resolve these hardware limitations by utilizing commercial Cloud Computing services.

The Current Risk: Using cloud-based GPUs requires the transmission of data to external servers for processing, which introduces potential exposure points for sensitive client information.

The Local Alternative: By establishing high-performance local GPU infrastructure, Project Rigel (a proposed offline AI infrastructure designed specifically for high-risk, high-sensitivity social service environments) is designed to eliminate the need for external transmission. This approach would allow agencies to access high-speed computational power while maintaining strong data sovereignty within the physical facility.

Evaluation Strategy

Methodological Fidelity

To ensure the comparison reflects real-world research practices, each model is evaluated within its native environment.

The Baseline: Follows standard HMIS conventions, using aggregated, redacted data analyzed in spreadsheet-based environments (Excel).

The Innovation: Follows modern data science workflows, using non-redacted synthetic sequences analyzed in an air-gapped High-Performance Computing (HPC) server with dedicated multi-GPU architecture designed for intensive tensor processing.

The Shared Target

Both models answer the exact same question:

"Can we accurately predict an exit to permanent housing?"

By locking the client cohort, geographic context, and train/test splits, we ensure that any difference in performance is attributable strictly to the methodology (Snapshot vs. Story), not the population.
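One simple way to lock a train/test split is to derive each client's assignment from a hash of their identifier, so both pathways get the same partition without sharing any state. The sketch below illustrates that idea. The salt string, the 20% test fraction, and the function name are assumptions, not the study's documented procedure.

```python
import hashlib


def assign_split(
    client_id: str,
    test_fraction: float = 0.2,      # assumed hold-out share
    salt: str = "rigel-split-v1",    # hypothetical constant; changing it reshuffles everyone
) -> str:
    """Deterministically map a client to 'train' or 'test' from a salted hash."""
    digest = hashlib.sha256(f"{salt}:{client_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return "test" if bucket < test_fraction else "train"


# The baseline and deep learning pathways would call the same function,
# so a given client always lands on the same side of the split.
splits = {cid: assign_split(cid) for cid in ("client-001", "client-002", "client-003")}
```

Because the assignment depends only on the identifier and the salt, rerunning either pathway months later reproduces the same cohort boundaries.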

The Evaluation Framework

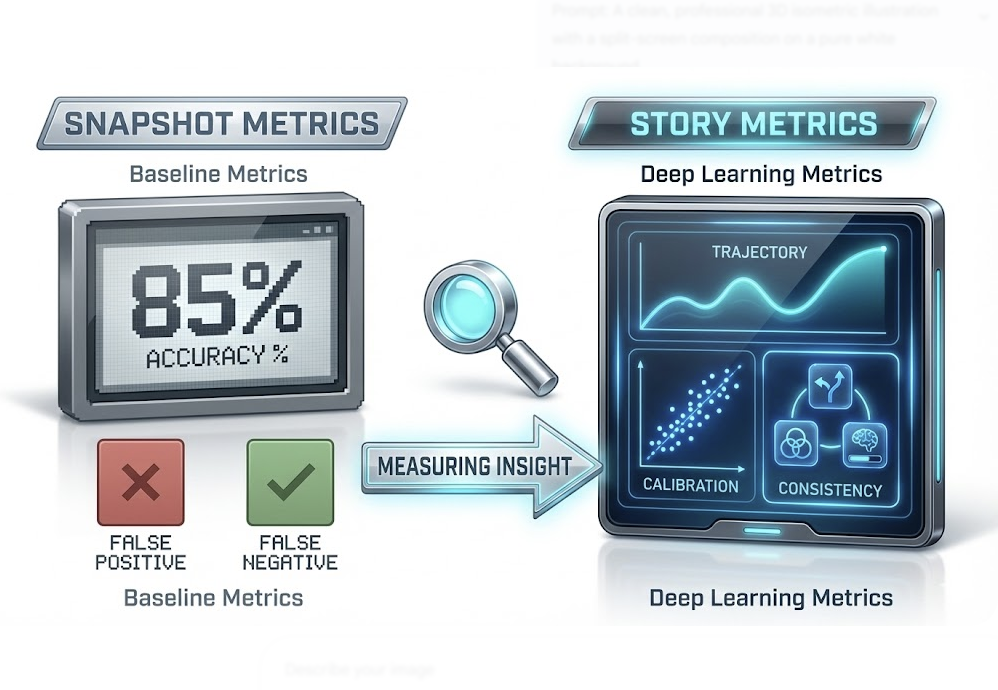

Baseline Metrics (The Snapshot)

Here, we evaluate the static approach using familiar regression-style measures:

Predictive Accuracy: The overall success rate in classifying housing exits.

False Positives: Clients flagged as "High Risk" due to high historical counts who are actually stabilizing.

False Negatives: Clients who may be ready for housing but are missed because their total service counts appear low.

Deep Learning Metrics (The Story)

Evaluating the sequence approach with a focus on temporal nuance:

Trajectory Sensitivity: Can the model distinguish between a client spiraling into crisis versus one climbing out of it?

Calibration: Do the predicted probabilities line up with what actually happens across the client’s journey?

Subgroup Consistency: Ensuring that performance gains hold true across different demographics, rather than optimizing for a single group.
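Several of the measures above reduce to short computations over predictions and outcomes. The sketch below implements confusion counts, accuracy, a binned calibration table, and per-group accuracy in plain Python. Label 1 stands for whichever class the evaluation treats as positive, and the function names and bin count are illustrative assumptions rather than the study's exact definitions.

```python
from collections import defaultdict


def confusion(y_true: list[int], y_pred: list[int]) -> dict[str, int]:
    """Count true/false positives and negatives for binary labels."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for t, p in zip(y_true, y_pred):
        key = ("t" if t == p else "f") + ("p" if p == 1 else "n")
        counts[key] += 1
    return counts


def accuracy(counts: dict[str, int]) -> float:
    """Share of all predictions that were correct."""
    return (counts["tp"] + counts["tn"]) / sum(counts.values())


def calibration_table(y_true, y_prob, n_bins: int = 5):
    """Per probability bin: (mean predicted probability, observed rate, count)."""
    bins = [[] for _ in range(n_bins)]
    for t, p in zip(y_true, y_prob):
        bins[min(int(p * n_bins), n_bins - 1)].append((t, p))
    return [
        (sum(p for _, p in b) / len(b), sum(t for t, _ in b) / len(b), len(b))
        for b in bins
        if b
    ]


def accuracy_by_group(y_true, y_pred, groups) -> dict[str, float]:
    """Subgroup consistency: accuracy computed separately for each group label."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        hits[g] += int(t == p)
    return {g: hits[g] / totals[g] for g in totals}
```

A well-calibrated model shows mean predicted probabilities close to observed rates in every bin, and large gaps between groups in `accuracy_by_group` would signal the subgroup inconsistency the evaluation is designed to catch.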

The Comparative Goal

We aim to understand where information is lost.

By comparing these workflows side-by-side, we quantify exactly what insight is gained when time is preserved, and what is lost when analysis is restricted to static summaries.

The Comparative Framework: Baseline vs. Innovation

Benchmarking Statistical Rigidity against Temporal Fluidity

Core Concept

Before deploying complex neural architectures, we must establish a performance baseline. This phase of the study compares a traditional, interpretability-focused approach against a sequence-aware deep learning model using the exact same dataset.

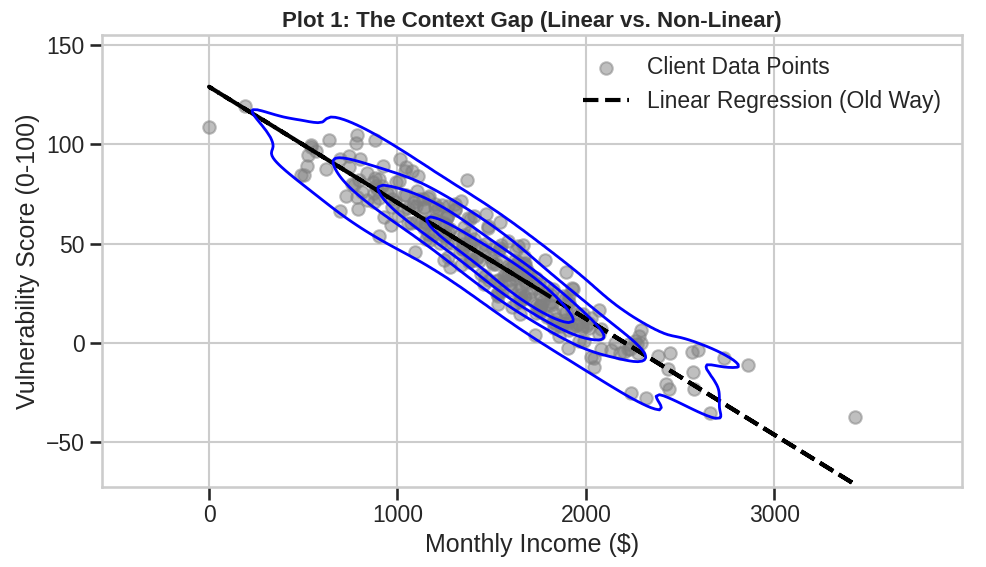

Plot 1: The Context Gap

Why "above average" income does not always mean "safe."

The Revelation: In a traditional Linear Model, the system assumes that Money = Safety. It draws a straight line saying, "If you have income (X-axis), your Vulnerability Score (Y-axis) must be low."

The Insight: The AI sees the blue islands (the contours). It identifies clients who might make $1,500/month (high income) but still have a vulnerability score of 80 (high risk) due to things money can't fix immediately like eviction history, disability, or lack of legal status.

The Y-Axis represents the 'Severity of Need' (similar to a VI-SPDAT score). While traditional models assume earning money automatically lowers this score, the AI reveals that income does not always cure vulnerability.

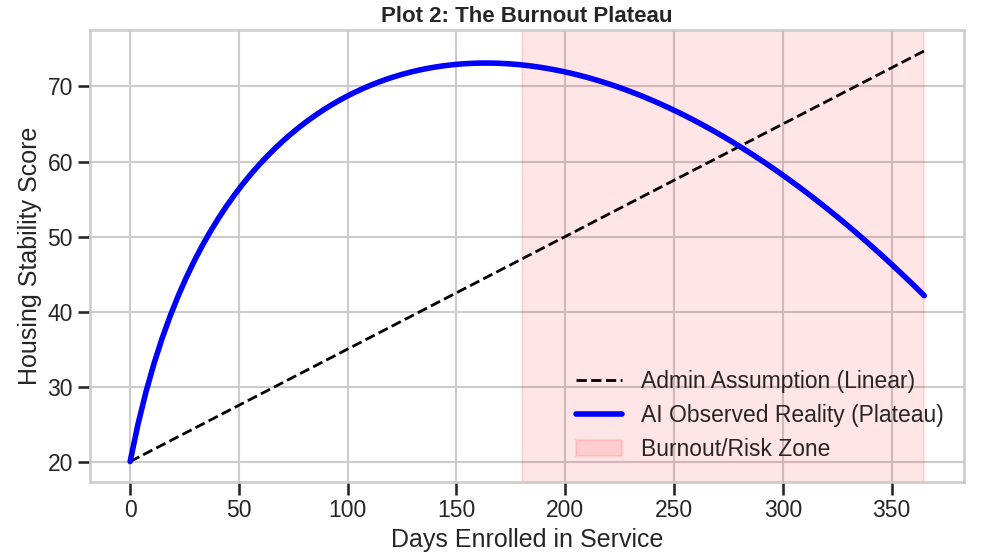

Plot 2: The Burnout Plateau

More time in the system does not equal better outcomes.

The Revelation: Traditional administrative logic assumes that every day a client spends in a program, they get a little bit better (the black line). The AI analysis reveals a dangerous plateau effect (the blue curve). After about 180 days, client progress often stalls, and risk begins to climb again due to system fatigue.

The Insight: Administrators often operate on the linear assumption (the black dashed line): the belief that the longer a client remains enrolled in a support program, the more stable they become. However, the AI analysis reveals a critical plateau effect (the blue curve). We see that client progress typically spikes early but stalls significantly around Day 180. This suggests that simply extending program length does not improve outcomes; instead, it highlights a need to front-load intensive resources in the first six months before service fatigue sets in and the risk of dropout increases.

X-Axis (Time): The number of days the client has been enrolled in the program.

Y-Axis (Stability): A measure of housing retention and self-sufficiency.

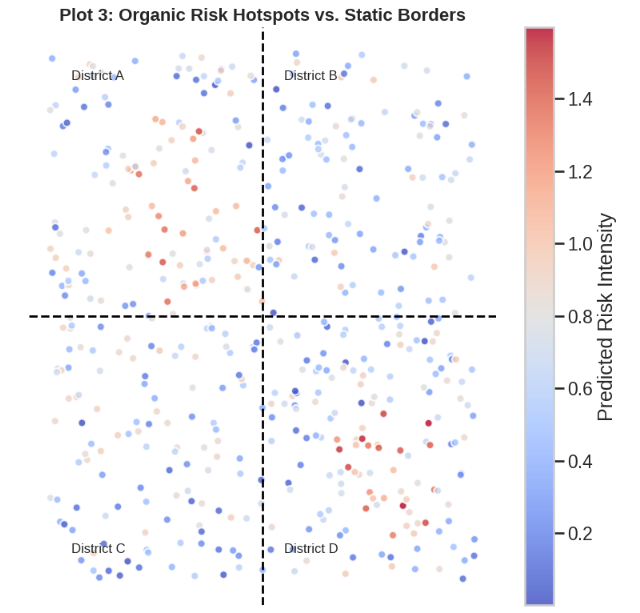

Plot 3: Organic Risk Hotspots

Homelessness does not respect City Council districts.

The Revelation: We typically fund services based on zip codes or district lines (the grid). But human crises are organic. The AI ignores these invisible borders and maps risk clusters based on actual client movement.

The Insight: We typically fund services assuming need is spread evenly across a zip code or district (the black grid). But human crises are organic. The AI ignores artificial borders and maps risk clusters based on actual client movement (the red/blue heat). This map transforms resource allocation from general to surgical. Instead of spreading resources thinly across an entire district, the AI allows agencies to pinpoint micro-hotspots of need. This ensures that outreach teams are deployed exactly where the crisis is densest, maximizing the impact of every dollar spent.

The Grid: The black lines represent artificial City Council or Service Provider districts.

The Heatmap: The red and blue areas represent actual concentrations of high-risk clients.

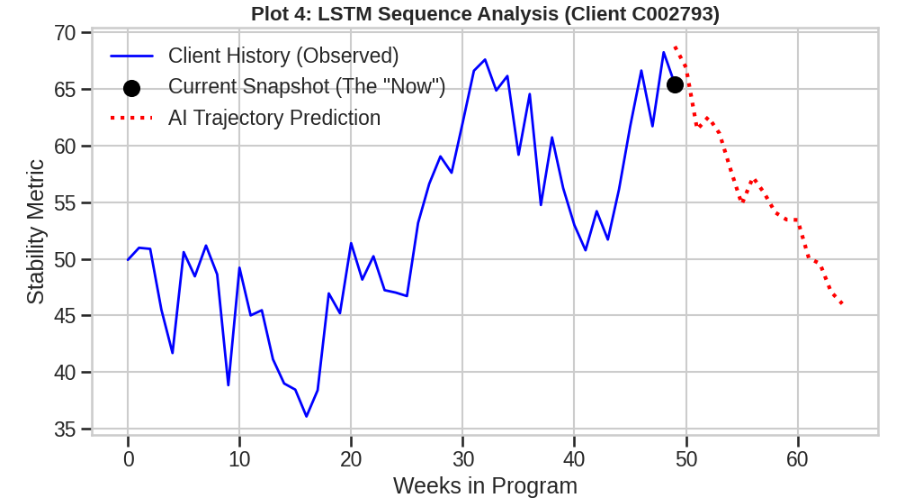

Plot 4: The Trajectory vs. The Snapshot

Why a stable day does not always mean a safe future.

The Revelation: If a caseworker looked at this client’s file today (the black dot), they would see a decent stability score and might assume the case is going well. But the LSTM model watches the movie, not the photo. It sees the volatility in the client’s history (the blue line) and predicts a coming decline (the red line).

The Insight: This plot illustrates the danger of the snapshot view. If a case manager looks at this client's file today (the black dot), they see a decent stability score and might assume the case is going well. However, the Deep Learning model views the client’s history as a movie, not a photo. By analyzing the volatility in the past (the blue line), the AI correctly identifies that this client is on a downward path. The descending red line acts as an early warning system, predicting a future drop in stability before it happens. This allows the caseworker to intervene now, rather than waiting for the client to fall back into homelessness.

Y-Axis (Stability Metric): 0 = Street Homelessness; 100 = Permanent Self-Sufficiency.

The Trend: Even though the client is currently at a "60" (Stable), the AI predicts a drop to "40" (At Risk) within 4 weeks.

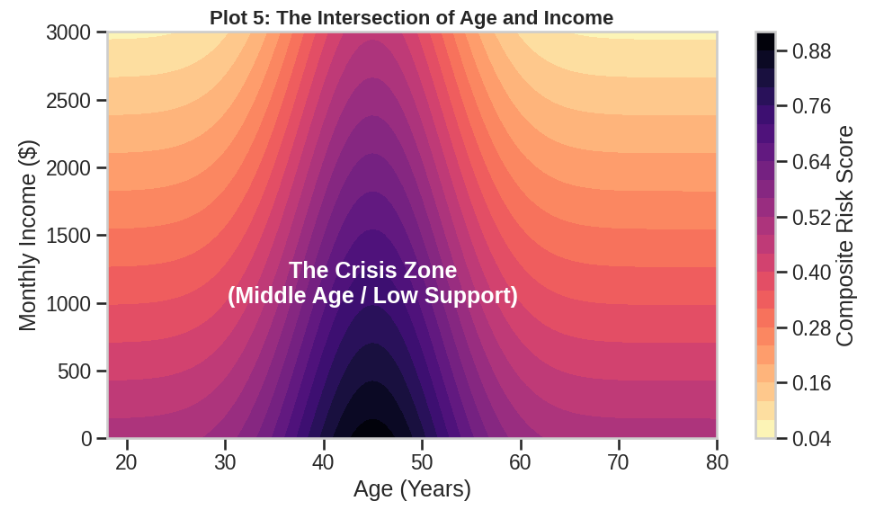

Plot 5: The Demographics of Intersectionality

The invisible crisis of the middle-aged adult.

The Revelation: Standard reports track seniors and low income as separate buckets. This heatmap reveals a crisis zone (dark purple) specifically for adults aged 40–55. They are too young for senior housing but too old for youth programs, leaving them with the fewest safety nets.

The Insight: Standard demographic reports usually separate clients into broad buckets like youth or seniors. This heatmap reveals a specific, invisible crisis zone (the dark purple area) involving adults aged 40–55 with low income. These individuals are statistically the most vulnerable because they fall into the service gap as they are too old for Transition Age Youth (TAY) programs but too young for Senior Housing subsidies. The AI model highlights this group not just as low income, but as uniquely fragile due to a lack of age-specific safety nets.

X-Axis (Age): The client's age in years.

Y-Axis (Income): The client's monthly income.

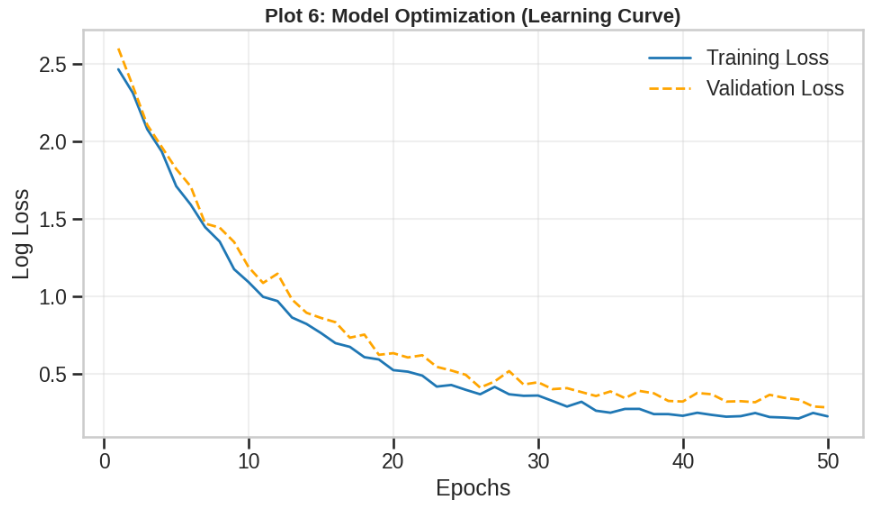

Plot 6: The Learning Curve

Watching the machine learn to think like a social worker.

The Revelation: How do we know this isn't random guessing? This plot tracks the model's error rate over time. In the beginning, it knows nothing (high error). But over 50 training cycles, it rapidly learns the complex grammar of homelessness.

The Insight: How do we know the AI isn't just guessing? This plot visualizes the system's education. The Y-axis represents the error rate (Loss). At the beginning (left side), the error is high because the model knows nothing. But as it processes training cycles (Epochs), the error drops dramatically. This curve is evidence that the system is fitting the complex grammar of homelessness in its training data rather than guessing at random. On its own, a falling training loss does not establish that patterns such as the Burnout Plateau generalize; that is confirmed by checking the same metrics on held-out clients, as described in the Evaluation Strategy.

X-Axis (Epochs): The number of training cycles the AI has run (Time).

Y-Axis (Loss/Error): How many mistakes the AI makes. Lower is Better.

The Democratization of Data Science

The Wicked Problem:

Social service organizations navigate a complex intersection between the integration of advanced predictive analytics and a steadfast ethical commitment to client privacy. This landscape creates a systemic tension where the most sensitive lived experiences are intentionally managed outside of digital systems to preserve the 'right to be forgotten,' resulting in a critical gap of 'hidden' clinical wisdom that hinders comprehensive service coordination and housing stability.

The Project Rigel Solution:

This infrastructure does not just run code; it democratizes intelligence. By pairing the analytic power of Deep Learning (the plots above) with the linguistic capability of a Large Language Model (LLM), Project Rigel removes the technical barrier entirely.

Project Rigel is designed to significantly lower the technical barrier to advanced analytics while supporting inclusivity. The primary interface uses UX Tiles, purpose-designed workflow modules that allow any practitioner to access the system's full analytical capability by selecting the task that matches their current need. No coding, no prompt formulation, no technical background required.

For staff with greater technical proficiency, the underlying LLM layer remains accessible for open-ended queries and custom analytical requests.

Regardless of how a practitioner initiates a request, every interaction returns a structured, auditable output governed by the same Glass Box logic path standards.

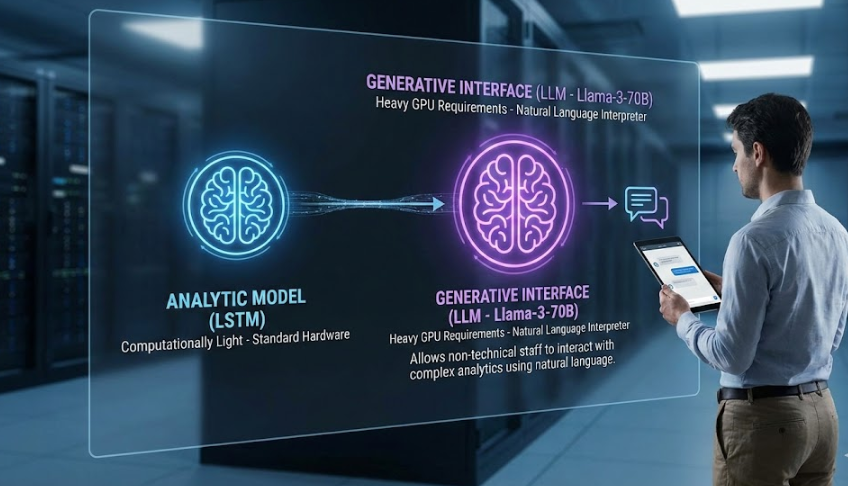

The "Two-Brain" Architecture

Why it is important to distinguish between the Analytic Model and the Generative Interface.

The predictive analytical LSTM models are computationally light and can run on standard hardware.

The Generative LLM (Llama-3-70B) will function as the "interpreter" of that data.

The heavy GPU requirements are specifically to support this generative layer, allowing non-technical staff to interact with complex analytics using natural language.

Final Vision: From Managing Crisis to Engineering Stability

In conclusion, Project Rigel represents a fundamental shift in how we address the "Wicked Problem" of homelessness. Historically, social service agencies have rigorously maintained HIPAA security protocols to protect the absolute privacy of their clients. This commitment is non-negotiable, but it has meant that the power of modern cloud-based AI was previously out of reach.

By deploying secure, on-premise GPU infrastructure to support Deep Learning within agency environments, we aim to validate and support the hard work of frontline case managers. The goal is to provide social workers with a distinct technological advantage that respects security standards while upgrading research capabilities from retrospective, regression-based reporting to proactive, deep-learning-based prediction.

Project Rigel is not designed to replace the social worker with an algorithm. It is designed to augment their tireless efforts with the most advanced analytical tools of the 21st century. If Project Rigel garners support, this infrastructure would ensure that when a client walks through an agency's doors, dedicated practitioners would have the intelligence, the speed, and the precision to help their clients stay housed, permanently.